On Thursday, OpenAI announced that they had trained a language model. They used a large training dataset and showed that the resulting model was useful for downstream tasks where training data is scarce. They announced the new model with a puffy press release, complete with this animation (below) featuring dancing text. They demonstrated that their model could produce realistic-looking text and warned that they would be keeping the dataset, code, and model weights private. The world promptly lost its mind.

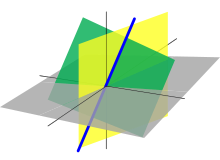

For reference, language models assign probabilities to sequences of words. Typically, they express this probability via the chain rule as the product of probabilities of each word, conditioned on that word’s antecedents: $p(w_1, \ldots, w_n) = \prod_{i=1}^{n} p(w_i \mid w_1, \ldots, w_{i-1})$.

Alternatively, one could train a language model backwards, predicting each previous word given its successors. After training a language model, one typically either 1) uses it to generate text by iteratively decoding from left to right, or 2) fine-tunes it to some downstream supervised learning task.
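To make the chain-rule factorization concrete, here is a minimal sketch in Python. It is not the model from the announcement: it assumes a toy bigram model, which conditions each word only on the single preceding word rather than on all of its antecedents, and it estimates probabilities by counting. The corpus, the `<s>`/`</s>` boundary tokens, and all function names are illustrative assumptions.

```python
import random
from collections import Counter, defaultdict

# Hypothetical sentence-boundary tokens, chosen for this sketch.
BOS, EOS = "<s>", "</s>"

def train_bigram(corpus):
    """Count how often each word follows each previous word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = [BOS] + sentence.split() + [EOS]
        for prev, word in zip(tokens, tokens[1:]):
            counts[prev][word] += 1
    return counts

def cond_prob(counts, prev, word):
    """Maximum-likelihood estimate of p(word | prev); 0.0 if prev is unseen."""
    nexts = counts.get(prev, Counter())
    total = sum(nexts.values())
    return nexts[word] / total if total else 0.0

def sequence_prob(counts, sentence):
    """Chain rule: multiply the conditional probability of each word in turn."""
    tokens = [BOS] + sentence.split() + [EOS]
    prob = 1.0
    for prev, word in zip(tokens, tokens[1:]):
        prob *= cond_prob(counts, prev, word)
    return prob

def generate(counts, rng, max_len=20):
    """Decode left to right, sampling each next word given the previous one."""
    prev, out = BOS, []
    for _ in range(max_len):
        words = list(counts[prev].keys())
        weights = list(counts[prev].values())
        word = rng.choices(words, weights=weights)[0]
        if word == EOS:
            break
        out.append(word)
        prev = word
    return " ".join(out)

# Toy corpus, made up for illustration only.
corpus = ["the cat sat", "the dog sat", "the cat ran"]
model = train_bigram(corpus)

print(sequence_prob(model, "the cat sat"))
print(generate(model, random.Random(0)))
```

A neural language model replaces the count table with a learned function of the full left context, but scoring a sequence and generating from left to right follow the same pattern.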

Training large neural network language models and subsequently applying them to downstream tasks has become an all-consuming pursuit that accounts for an outsized share of the research in contemporary natural language processing.

Continue reading “OpenAI Trains Language Model, Mass Hysteria Ensues”

Long-term AI safety is an inherently speculative research area, aiming to ensure safety of advanced future systems despite uncertainty about their design or algorithms or objectives. It thus seems particularly important to have different research teams tackle the problems from different perspectives and under different assumptions. While some fraction of the research might not end up being useful, a portfolio approach makes it more likely that at least some of us will be right.

On the attack side, adversarial perturbations
