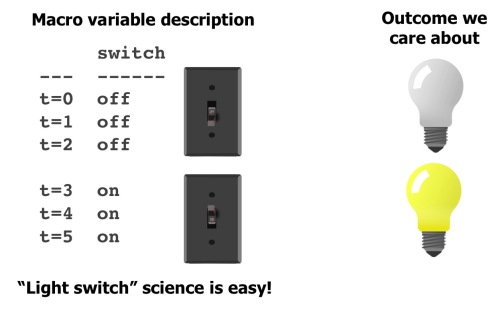

Consider a little science experiment we’ve all done: finding out whether a switch controls a light. How many data points does it usually take to convince you? Not many! Even if you didn’t run a randomized trial yourself and only watched somebody else flip the switch, you’d figure it out pretty quickly. This type of science is easy!

One thing that makes this easy is that you already know the right level of abstraction for the problem: what a switch is, and what a bulb is. You also have some prior knowledge, e.g., that switches typically have two states and that they often control things like lights. What if the data you had was actually a million variables, representing the state of every atom in the switch, or in the room?

Even though, technically, this data includes everything about the state of the switch, it’s overkill and not directly useful. To make it useful, we would want to boil it back down to a “macro” description consisting of just a switch with two states. Unfortunately, it’s not easy to go from the micro description to the macro one. One reason is the “curse of dimensionality”: a few samples from a million-dimensional space leave it severely under-sampled, and directly applying machine learning methods to this type of data typically leads to unreliable results.
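One symptom of this under-sampling can be sketched in a few lines. The Python below is a toy under stated assumptions, not data from any real experiment: every “atom” is an independent random bit with no connection to the light, yet with only a handful of observations some of them still match the light perfectly (or perfectly anti-match it) by chance. For d such variables and n observations, the expected number of these spurious perfect predictors is d · 2 · 2^(−n). The function names are hypothetical.

```python
import random


def expected_perfect_predictors(n_vars: int, n_samples: int) -> float:
    """Expected number of pure-noise binary variables that match (or anti-match) the light exactly."""
    return n_vars * 2 * 0.5 ** n_samples


def count_perfect_predictors(n_vars: int, n_samples: int, seed: int = 0) -> int:
    """Simulate n_vars independent random bits per observation and count exact (anti-)matches."""
    rng = random.Random(seed)
    all_ones = (1 << n_samples) - 1       # bitmask that flips every observation
    light = rng.getrandbits(n_samples)    # light on/off across n_samples observations
    hits = 0
    for _ in range(n_vars):
        atom = rng.getrandbits(n_samples)  # one "atom", generated independently of the light
        if atom == light or atom == light ^ all_ones:
            hits += 1
    return hits


d, n = 200_000, 10
print(expected_perfect_predictors(d, n))  # 390.625
print(count_perfect_predictors(d, n))     # random, but typically close to the expectation
```

With 200,000 noise variables and 10 observations, hundreds of “perfect predictors” appear even though none of them has anything to do with the light; adding observations shrinks this number exponentially, adding variables grows it linearly.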

As an example of another thing that could go wrong, imagine that we detect, with p<0.000001, that atom 173 is a perfect predictor of the light being on or off. Headlines immediately proclaim the important role of atom 173 in the production of light. A complicated apparatus to manipulate atom 173 is devised, only to reveal… nothing. The role of this atom is meaningless in isolation from the rest of the switch. This hints at the meaning of “macro-causality”: to identify (simple) causal effects, we first have to describe our system at the right level of abstraction. Then we can say that flipping the switch causes the light to go on. A causal story involving all the atoms in the switch, the electrons, and so on does exist, but it is not very useful.

Social science’s micro-macro problem

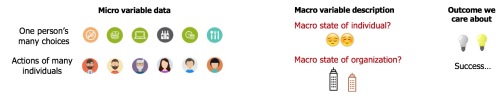

Social science has a similar micro-macro problem. If we get “micro” data about every decision an individual makes, is it possible to recover the macro state of the individual? You could ask the same question when the micro-variables are individuals and you want to know the state of an organization, like a company.

Currently, we use expert intuition to come up with macro-states. For individuals, this might be a theory of personality or mood, with states like extroversion, or a test for depression. After dreaming up a good idea for a macro-state, the expert makes up some questions that they think reflect that factor. Finally, they ask individuals to answer these questions. There are many places where things can go wrong in this process. Do experts really know all the macro-states for individuals, or organizations? Do the questions they come up with accurately gauge these states? Are the answers that individuals provide a reliable measure?

Most of social science is about answering the last two questions. We assume that we know what the right macro-states are (mood, personality, etc.) and we just need better ways to measure them. What if we are wrong? There may be hidden states underlying human behavior that remain unknown. This brings us back to the light switch example. If we can identify the right description of our system (a switch with two states), experimenting with the effects of the switch is easy.

Macro approaches and limitations

The mapping from micro to macro is sometimes called “coarse-graining” by physicists. Unfortunately, coarse-graining in physics usually relies on the physical laws of the universe. For instance, these laws let us analytically derive how to go from describing a box of atoms with many degrees of freedom to a simple description involving just three macro-variables: volume, pressure, and temperature.
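As a reminder of what such an analytic coarse-graining looks like, here is the textbook result for the simplest case, assuming an ideal gas of N identical monatomic particles of mass m with velocities v_i in a volume V (k_B is Boltzmann’s constant). Temperature summarizes the micro-level velocities through their average kinetic energy, and the ideal gas law then relates the macro-variables to one another:

$$
\tfrac{3}{2}\, k_B T = \Big\langle \tfrac{1}{2}\, m \,\lVert \mathbf{v}_i \rVert^2 \Big\rangle,
\qquad
P V = N k_B T.
$$

Millions of velocity components collapse into the single number T, and the relationship among P, V, and T makes no reference to any individual atom.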

The analytic approach isn’t going to work for social science. If we ask, “why didn’t Jane go to the party?”, an answer involving the firing of neurons in her brain is not very useful, even if it is technically correct. We want a macro-state description that gives us a more abstract causal explanation, even if the connection with micro-states is not as clean as the ideal gas law.

There are some more data-driven approaches to coarse-graining. One of the things I work on, CorEx, is based on the idea that “a good macro-variable description should explain most of the relationships among the micro-variables.” We have gotten some mileage from this idea, finding useful structure in gene expression data, social science data, and (in ongoing work) brain imaging, but it’s far from enough to solve this problem. Currently, these approaches address coarse-graining without handling the causal aspect. One promising direction for future work is to jointly model abstractions and causality.
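To make the CorEx idea concrete: CorEx quantifies “the relationships among the micro-variables” with total correlation, TC(X) = Σ_i H(X_i) − H(X), which is zero exactly when the micro-variables are independent. A macro-variable Y explains those relationships to the extent that the conditional total correlation TC(X | Y) is small. The Python below is a toy sketch with plug-in entropy estimates on simulated data, not the CorEx library or its optimization procedure; the data (five micro-variables that are noisy copies of a hidden two-state switch) and the function names are assumptions for illustration.

```python
import math
import random
from collections import Counter


def entropy(samples):
    """Plug-in Shannon entropy, in bits, of a list of hashable outcomes."""
    n = len(samples)
    return -sum(c / n * math.log2(c / n) for c in Counter(samples).values())


def total_correlation(rows):
    """TC(X) = sum_i H(X_i) - H(X_1, ..., X_d), estimated from rows of discrete data."""
    columns = list(zip(*rows))
    return sum(entropy(col) for col in columns) - entropy([tuple(r) for r in rows])


def conditional_tc(rows, labels):
    """TC(X | Y) = sum_y p(y) * TC(X | Y = y) for a discrete macro-variable Y."""
    groups = {}
    for row, y in zip(rows, labels):
        groups.setdefault(y, []).append(row)
    return sum(len(g) / len(rows) * total_correlation(g) for g in groups.values())


# Toy data (an assumption): a hidden two-state switch Y and five micro-variables,
# each a copy of Y that is flipped independently with probability 0.1.
rng = random.Random(0)
labels = [rng.randint(0, 1) for _ in range(2000)]
rows = [[y ^ (rng.random() < 0.1) for _ in range(5)] for y in labels]

print(f"TC(X)     = {total_correlation(rows):.2f} bits")
print(f"TC(X | Y) = {conditional_tc(rows, labels):.2f} bits")
```

TC(X) comes out well above one bit, while TC(X | Y) is close to zero: once the switch state is known, the micro-variables are independent up to estimation noise, so the two-state macro-variable explains their relationships.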

A long line of research sometimes called computational mechanics and developed by Shalizi and Crutchfield, among others, says that our macro-state description should be a minimal sufficient statistic for optimally predicting the future from the past. Here’s an older high-level summary and long list of other publications. One of the main problems with this approach for social science is that the sufficient statistics may not give us much insight for systems with many internal degrees of freedom. We would like to be able to structure our macro-states in a meaningful way. A similar approach also looks for compressed state space representations but focuses more on the complexity and efficiency of simulating a system using its macrostate description.
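The “minimal sufficient statistic” idea can be illustrated with a deliberately simplified sketch. In computational mechanics, two pasts belong to the same causal state when they induce the same distribution over futures. The Python below assumes a hypothetical order-1 binary Markov chain, where the next-symbol distribution already determines the whole future, so grouping length-2 histories by their estimated next-symbol probability is enough. It is not CSSR or any other published reconstruction algorithm; the tolerance `tol` and all function names are assumptions.

```python
import random
from collections import defaultdict


def simulate_markov(n, p1_after_0, p1_after_1, seed=0):
    """Binary order-1 Markov chain: P(next = 1) depends only on the current symbol."""
    rng = random.Random(seed)
    seq = [0]
    for _ in range(n - 1):
        p = p1_after_1 if seq[-1] == 1 else p1_after_0
        seq.append(1 if rng.random() < p else 0)
    return seq


def next_symbol_probs(seq, k):
    """Estimate P(next = 1 | previous k symbols) for every length-k history seen in seq."""
    ones, totals = defaultdict(int), defaultdict(int)
    for t in range(k, len(seq)):
        history = tuple(seq[t - k:t])
        totals[history] += 1
        ones[history] += seq[t]
    return {h: ones[h] / totals[h] for h in totals}


def group_histories(probs, tol=0.05):
    """Greedily merge histories whose predictive probabilities agree within tol."""
    states = []  # each entry: (representative probability, list of member histories)
    for history, p in sorted(probs.items()):
        for rep, members in states:
            if abs(rep - p) < tol:
                members.append(history)
                break
        else:
            states.append((p, [history]))
    return [members for _, members in states]


seq = simulate_markov(20_000, p1_after_0=0.3, p1_after_1=0.8)
print(group_histories(next_symbol_probs(seq, k=2)))
```

With these parameters the four length-2 histories should collapse into two states, one for histories ending in 0 and one for histories ending in 1: the minimal predictive description is just “the last symbol”. The greedy, tolerance-based merge is a simplification chosen for readability; real reconstruction methods use statistical tests and handle longer histories.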

Eberhardt and Chalupka introduced me to the term “macro-causal”, which they have formalized in the “causal coarsening theorem”. A review of their work using causal coarsening includes a nice example where they infer macro-level climate effects (El Niño) from micro-level wind and temperature data.

Finally, an idea that I really like, though it is still in its infancy, is to find macro-states that maximize interventional efficiency. A good macro-state description is one that, if we manipulated it, would produce strong causal effects. Scott Aaronson gives a humorous critique of this work, “Higher level causation exists (but I wish it didn’t)”. I don’t think the current formulation of interventional efficiency is correct either, but I think the idea has potential.
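One concrete formalization from this literature, assumed here to be representative rather than the exact formulation discussed above, is effective information (EI): set the system to each of its states with equal probability, and measure the mutual information between that intervention and the resulting next state. The Python sketch below uses a hypothetical four-state transition matrix in which states 0, 1, and 2 scramble uniformly among themselves and state 3 stays put. Grouping {0, 1, 2} into one macro-state yields a higher EI than the micro description, which is the kind of result at issue in the debate above.

```python
import math


def entropy(dist):
    """Shannon entropy in bits, ignoring zero-probability entries."""
    return -sum(p * math.log2(p) for p in dist if p > 0)


def effective_information(tpm):
    """EI = H(mean row) - mean H(row): mutual information between a uniform intervention and the next state."""
    n = len(tpm)
    effect = [sum(row[j] for row in tpm) / n for j in range(len(tpm[0]))]
    return entropy(effect) - sum(entropy(row) for row in tpm) / n


def coarse_grain(tpm, partition):
    """Macro transition matrix: average rows within each group, sum columns within each group."""
    return [
        [sum(tpm[i][j] for i in src for j in dst) / len(src) for dst in partition]
        for src in partition
    ]


third = 1 / 3
micro = [
    [third, third, third, 0.0],
    [third, third, third, 0.0],
    [third, third, third, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]
macro = coarse_grain(micro, [[0, 1, 2], [3]])

print(f"micro EI = {effective_information(micro):.3f} bits")  # about 0.811
print(f"macro EI = {effective_information(macro):.3f} bits")  # 1.000
```

The micro description pays for its noisy internal transitions, while the two-state macro description is perfectly deterministic, so intervening on the macro-state “produces stronger causal effects” in this specific information-theoretic sense.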

It’s hard to be both practical and rigorous when it comes to complex systems involving human behavior. The most successful example of discovering a macro-causal effect, I think, is the El Niño example, but even this is relatively easy compared to the problems in social science. In the climate case, they were able to restrict the focus to a homogeneous array of sensors in an area where we expect to find only a small number of relevant macro-variables. For humans, we get a jumble of missing and heterogeneous data and (intuitively) feel that there are many hidden factors at play even in the simplest questions. These challenges guarantee lots of room for improvement.
