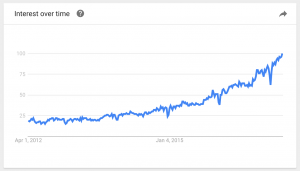

Interest in machine learning may be at an all-time high. Per Google Trends, people are searching for machine learning nearly five times as often as five years ago. And at the University of California San Diego (UCSD), where I’m presently a PhD candidate, we had over 300 students enrolled in both our graduate-level recommender systems and neural networks courses.

Much of this attention is warranted. Breakthroughs in computer vision, speech recognition, and, more generally, pattern recognition in large data sets, have given machine learning substantial power to impact industry, society, and other academic disciplines.

Like the internet before it, machine learning now stands poised to impact diverse areas of the economy. Whether you work in government, medical imaging, car services, the law, or recycling, there’s good reason to believe that machine learning will impact your life and finances.

For a researcher, there are many upsides to the current wave of enthusiasm. At this moment, machine learning presents fertile ground for scholarship. Across both the theory ⇔ hardware and methodology ⇔ application axes, there’s ample impactful work to be done. Even technically incremental work can have immediate effects in the real world. Accordingly, funding agencies, universities and industry have an enormous appetite for both empirically and theoretically motivated inquiry into machine learning.

[Of course, the rapid advance of machine learning presents many new challenges. One purpose for founding this blog is to address the social impacts of machine learning on the world, e.g., via technological unemployment or algorithmic decision-making. However, in this post we address specifically the spread of information/misinformation about AI and not the effects of the AI itself.]

Complicating matters, the wave of enthusiasm for machine learning has spread far beyond the small group of people possessing a concrete sense of the state of the field. Both in industry and the broader public, many people know that important things are happening in machine learning but lack any concrete sense of what precisely is happening.

A Perfect Storm

This pairing of interest with ignorance has created a perfect storm for a misinformation epidemic. The outsize demand for stories about AI has created a tremendous opportunity for impostors to capture some piece of this market.

When founding Approximately Correct, I lamented that too few academics possessed the interest or the talent for both expository writing and addressing social issues. On the other hand, too few journalists possess the technical strength to relate developments in machine learning to the public faithfully. As a result, there are not enough voices engaging the public in the non-sensational way that seems necessary now.

Unfortunately, the paucity of clear and informed voices has not resulted in a silent media. Instead, the void has been filled by charlatans, bombarding the public with incessant misinformation, much of it spread by opportunists, eager to seize upon the public’s interest.

As I quickly learned as a young PhD student blogging for KDnuggets, articles about deep learning get lots of clicks. Everything else being equal, in my experience, articles about deep learning attracted on the order of ten times as many eyeballs as other data science stories. This has made deep learning especially ripe for exploitation by click-baiters, including journalists in the traditional media who might possess the writing chops but are out of their depth – see the recent Maureen Dowd piece on Elon Musk, Demis Hassabis, and AI Armageddon in Vanity Fair for a masterclass in low-quality, opportunistic journalism.

As public interest and media mania have grown in tandem, so has my anxiety that the spread of misinformation poses a danger. Here are some of the clues that tip me off that something is seriously wrong:

- Widely circulated lists of the AI Influencers, even as published by companies like IBM that should know better, are populated mostly by people with no discernible contribution (or even expertise) in AI.

- With startling regularity, if I tell an educated person what I do, a question quickly follows in reference to the Singularity, a quasi-religious event with no reliable definition or connection to science that has been prophesied for the coming century and popularized by Ray Kurzweil.

- Despite the increasing familiarity of machine learning in the media, the quality of journalism hasn’t improved appreciably. With notable exceptions, newspapers and magazines of record haven’t risen to the challenge of providing terrestrial coverage on the topic despite the serious potential consequences for readers.

If machine learning had no societal impact, the present situation might not be so alarming. After all, the cartoonish descriptions of string theory and particle physics seem relatively harmless, since developments in particle physics show no sign of impacting free speech or the employment markets in the near future.

For several weeks, I’ve wanted to write a post dissecting the misinformation epidemic but I’ve been stifled by the sheer scale of the problem. To make the topic digestible, I’ve decided to write a multi-part series of posts, addressing the following narrower topics:

THE INFLUENCER INDUSTRY

This post will address the world of AI influencers, some legitimate researchers with outsize influence, others self-designated experts whose influence owes to the Paris Hilton effect, deriving greater celebrity from existing celebrity through social media feedback loops and TED-style puff talks.

THE PROPHETS (Profits?) OF FUTURISM

Here, I’ll address the outsize coverage garnered by futurists. The most widely covered ideas, many inconcrete and unfalsifiable, have the flavor of mysticism. And yet, wrongly, they are frequently given a seat at the table alongside more sober reason, absent qualification. I plan to take a critical look at these ideas, e.g., the Singularity, Ancestor Simulations, and their coverage in social and mainstream media.

THE FAILURE OF THE PRESS

In this piece, I’ll analyze the mainstream press’s attempts to write about machine learning. I will try to distill the common failure modes (e.g. failing to distinguish between real technology and fictional technology, overstating the brain-like nature of neural networks, and injecting expert opinions despite lacking expertise).

THE PERILS OF MISINFORMATION

Finally, I plan to analyze the various dangers presented by this misinformation. Namely, if people are unable to understand the complex phenomena impacting their lives, then we cannot reasonably expect politicians to argue about the topic clearly, or voters to make informed decisions. Absent broad (and clear) understanding of the state of machine learning research, we cannot expect a democratic society to regulate the use of machine learning or to manage the economic consequences reasonably and appropriately.

When talking about the “singularity”, please distinguish between the three things people mean when they say that word (see here: http://yudkowsky.net/singularity/schools/). All three deserve to be criticized as strongly as possible, but some are much harder to criticize than others.

Nice of him to present the motte and bailey forms of the arguments.

Second. I’m not exactly allergic to the Yudkowsky flavor of “””singularity””” like I am the Kurzweil one (which is what the author seems to be addressing? can’t tell); I *love* reading thoughtful and informed criticisms of the Yudkowsky flavor, but *nobody seems to be even capable of doing this*, they just smear them all together into the same sludge from a position of confident ignorance and point and laugh at the dumbest nearby stereotype.

I don’t have much hope that our author will manage to produce well-informed criticism of Friendly AI/etc. thinking, but I do have a little.

> I find it very annoying, therefore, when these three schools of thought are mashed up into Singularity paste. Clear thinking requires making distinctions.

> But what is still more annoying is when someone reads a blog post about a newspaper article about the Singularity, comes away with none of the three interesting theses, and spontaneously reinvents the dreaded fourth meaning of the Singularity:

> “Apocalyptism: Hey, man, have you heard? There’s this bunch of, like, crazy nerds out there, who think that some kind of unspecified huge nerd thing is going to happen. What a bunch of wackos! It’s geek religion, man.”

These extrapolations of past trends to predict future events don’t make any sense in the context of complex (and presently unknowable) questions like what consciousness is and whether machines will become conscious. It’s like people in London claiming the city would be 10m deep in horse shit if the number of horse-drawn carriages kept growing at its then-current rate. It annoys me because of the implicit intellectual arrogance based on incredibly weak assumptions. I get the sense that at the heart of it all lies a kind of god complex on the part of people like Kurzweil.

Thanks for this post, and hurrah for any efforts exposing bullshit.

I disagree with your stance on the Singularity here:

“the Singularity, a quasi-religious event with no reliable definition or connection to science that has been prophesied for the coming century”

Technological capability is accelerating at an accelerating pace. It’s a double exponential, readily observable in the price performance of many scientific fields based in information technologies. http://www.singularity.com/charts/page17.html Newer, more comprehensive data can be found in Kurzweil’s recent presentations.

Try to describe, in the least esoteric terms possible, what this means for humanity in the short to medium term. It will still be a meaningful and important idea.

Statements like this are part of the problem: “Technological capability is accelerating at an accelerating pace. It’s a double exponential, readily observable in the price performance of many scientific fields based in information technologies”.

This does not mean anything. What precisely is doubly exponential? *Technology* is not a quantity. Not surprisingly, you support this nebulous claim with a reference to the singularity.com homepage. I hope the religious flavor of this argumentation is not lost on you.

It’s exactly what I said. The acceleration is accelerating, e.g., W = W_0 * exp(C_1 * exp(C_2 * t))

where W is directly proportional to a technological capability such as computation, (calculations per second per fixed dollar).
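To make the shape of that claim concrete, here is a minimal Python sketch of the double-exponential form in the formula above. The constants `w0`, `c1`, and `c2` are purely illustrative assumptions, not values fitted to any data, and the function names are hypothetical. The point is only that for this functional form the growth rate of ln W is itself an exponential in t, which is what "the acceleration is accelerating" would mean if the form held.

```python
import math

def double_exponential(t, w0=1.0, c1=1.0, c2=0.1):
    """W(t) = w0 * exp(c1 * exp(c2 * t)); constants are illustrative, not fitted."""
    return w0 * math.exp(c1 * math.exp(c2 * t))

def log_growth_rate(t, c1=1.0, c2=0.1):
    """d/dt ln W(t) = c1 * c2 * exp(c2 * t): the growth rate itself grows exponentially."""
    return c1 * c2 * math.exp(c2 * t)

# Tabulate W and its logarithmic growth rate at a few hypothetical time points.
for t in (0, 10, 20, 30):
    print(t, double_exponential(t), log_growth_rate(t))
```

Whether any real capability measure follows this form over a given period is an empirical question that the sketch does not settle; it only shows what the formula asserts.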

The raw data and primary sources are in my cult’s, I mean my religion’s holy books, I mean, well they’re here: http://www.singularity.com/charts/page67.html

How come that data stops in 1998? Are there no statistics on computer performance in the last 18 years? Well, single-threaded performance, which the table you post shows, has already hit a brick wall. It’s going nowhere. Moore’s Law is still serving us well, but every other technology we have ever had has eventually reached a plateau. Jet engines, internal combustion, rockets, nuclear power. We exploit the physics up close to its physical limits, then there’s nowhere left to go. In fact, most of the technologies in use today are only gradually improving. Has technology in the last 10 years really improved more than in the previous 20? 10 years ago I was using a dual-core 24″ Intel Mac that wouldn’t look out of place today. 30 years ago I was using a machine running CP/M and I’d never even seen a GUI.

https://www.karlrupp.net/2015/06/40-years-of-microprocessor-trend-data/

https://www.flickr.com/photos/jurvetson/31409423572/

It seems sensible to think about what opportunities and risks might come if these trends continue as far as the laws of physics will allow.

Now that you mention it, I’d be interested in learning about the current state of the art in turbomachinery. There’s a lot that must be possible today that wasn’t possible 20 years ago.

Technology will dramatically reshape the future in ways that cannot be anticipated. That’s true. But arguments that refer to exponential “technology capability” (especially those that invoke Moore’s law) always elide the fact that, by and large, we still have no idea what we’re doing in AI. An infinitely fast CPU would scarcely help, and our current heuristics for the kind of computation we would like to achieve (our own brains) are barely intelligible to those at the forefront of research.

I don’t want hand-waved promises. I want facts. I want a roadmap of advances. I want to know what problems are on the cusp of being solved, and how they affect the ultimate goal of GAI. And if you believe the Singularity is surely impending but can’t offer me anything beyond graphs carefully plotted along entirely arbitrary axes, then yes, it is a quasi-religious faith.

An infinitely fast CPU would be incredibly helpful! We could use a simple Bayesian/probabilistic approach to brute force our way through many obstacles.

I think you got carried away 🙂

I share your distaste for hand waving. I can’t speak for Singularitarianism but this is the extent of my beliefs:

It seems more likely than not that these trends will continue as far as the laws of physics will allow, and that a growing percentage of human endeavor is becoming based on information technologies. I see these capabilities (calculations per second per constant dollar, base pairs sequenced per dollar, etc.) as enabling, i.e., they help us create and understand things that were previously impossible. Therefore, I’d be embarrassed if we don’t make significant advances in the improvement of the human condition relatively soon. I’d be aggravated if we overlook the risks.

I think many people who have thought about the implications of accelerating change are just interested in building useful stuff.

Of course everybody wants facts about the future. That would amount to clairvoyance. If this is indeed your requirement for talk about the future to not be “quasi-religious faith”, then maybe you should consider not talking about the future at all. And let the rest of us have fun with our faith, please 😀

There is a logical fallacy here. The idea that “technology advances rapidly,” leading to “the singularity” or anything similar. This argument requires that technology becomes self-aware, and knows how to self-improve at an exponential, or otherwise very rapid rate, to infinity, or a very powerful limit. To demonstrate this fallacy, let’s assume you actually are an android, or a simulation (who knows, you might be). Let’s assume god, or the simulator, or a brain surgeon gives you the control to self-improve. Could you do it? No, we don’t even understand how we work, and can’t recreate what we are in machines we build, so even if you had those controls, you wouldn’t be able to do the infinite self-improvement thing that results in you becoming the singularity. Fear of the singularity is superstitious fear of the unknown, and I’ve never seen any rational argument supporting it.

The past does not dictate the future. The “double-exponential” trend might not and does not continue.

I think it’s fair to say that an increase in raw computational power does not necessarily mean we are any closer to AGI. There are probably a lot of problems in the development of AGI which need direct application of the human mind to solve. This progress does not happen on its own — it’s researchers like Zachary who actually cause this progress on the ground.

I think it’s similar to how building a reusable rocket is extremely hard despite the fact that we already have the necessary resources available to do it. But only now are we making a modicum of progress on that front.

You do know that Moore’s law (the thing you’re basing all your claims on) is drying up, right? Those advancements that you see plotted on those graphs are mostly because over that time, we got better and better at manufacturing semiconductors on higher-res nodes – have a read through this:

https://en.wikipedia.org/wiki/Semiconductor_device_fabrication

Those data points you reference are nice and all, but they don’t reflect the reality that the driving force of Moore’s Law – the jumps in the node size of the manufacturing process – is almost over – we’re now on 14nm, and 5nm is pretty much the limit, which means that around 2020, this whole thing will dry up.

Then, there’s still architectural improvements that can help make progress, optimizations, maybe someone will figure out a way to go lower than 5nm – but nothing near the explosive growth in computational power we’ve seen these past few decades.

Is this written in your holy books?

Yes, it is.

The current paradigm in computational systems, Moore’s Law, will run out of steam. This will be the fifth time that this happens, after electromechanical relays, vacuum tubes, and discrete transistors.

“When Moore’s Law reaches the end of its S-curve, now expected before 2020, the exponential growth will continue with three-dimensional molecular computing, which will constitute the sixth paradigm.” Kurzweil, Ray (2005-09-22). The Singularity Is Near: When Humans Transcend Biology (Kindle Locations 1370-1371). Penguin Group. Kindle Edition.

… and there was much rejoicing.

My kingdom for an edit button. I missed a comma:

“electromechanical, relays, vacuum tubes, and discrete transistors”

I completely agree with Zac here.

I would be very, very careful about extrapolating growth. There are physical limits that you cannot overcome and, more importantly, things that are so complex to both describe and calculate that, for example, the number of bits needed to process the problem would only be writable on a storage medium as large as the universe. Good luck.

There’s no edit button, so here is what I meant to say in this sentence:

“[…] readily observable in historical data of performance measures of technologies in different scientific fields based on information technology”

Also: More recent data https://www.flickr.com/photos/jurvetson/31409423572/

I think we can all appreciate that you’re an academic scientist with a focus on machine learning. But – no offense – it’s obvious you’re making a mountain out of a molehill here.

Are there some misunderstandings among the broad public and among journalists about machine intelligence? Sure. But is there an “epidemic of misinformation”? Hardly.

If you read mainstream media coverage of AI, you might be pleased to see how consistently journalists make the distinction between narrow AI and general AI. And that really is the only distinction necessary.

Does narrow AI pose a technological unemployment threat? Of course it bloody does – just look at the millions of drivers who are staged to be out of work thanks to self-driving cars.

Is general AI an existential threat? Of course it bloody is, and you don’t need to read Nick Bostrom’s book to understand why.

So your concerns about “misinformation” are misplaced, because 1) the media generally *does* distinguish narrow and general AI; 2) narrow AI is every bit as big of a threat to employment as people fear; and 3) general AI is an existential threat and neither you nor anyone else has the slightest clue how far away we are from cracking it.

I think we agree on many points but I flatly disagree with you about the extent of the problem. It’s not a “molehill” – it truly is out of control. I’d say it’s at the point where the amount of misinformation is far larger than the amount of good coverage. There are many notable exceptions. Jack Clark (formerly Bloomberg, now OpenAI) and Katyanna Quach (Register) are notable examples of writers who lucidly describe developments in AI. Cade Metz (Wired) has gotten better. But the majority of the coverage is rubbish.

In my eyes, the situation is reminiscent of the news’ failure to adequately cover the derivatives market prior to the financial collapse. Even after it happened, they struggled to explain what had happened. Of course, it’s not easy. Most people with a decent grasp of the machine learning techniques receive enormous salaries. So both a) cultivating and b) retaining competent journalists with the tools to provide engaging but terrestrial coverage is difficult. Nevertheless I believe it’s essential.

I enjoyed reading the article as well as the comments. Thanks Zachary. If you compare this situation to derivatives and the financial market collapse, what are the negatives of spreading misinformation about AI? I’m not seeing your perspective or how the analogy works yet.

The stakes are really high. “Experts” such as Elon Musk and Stephen Hawking, whose opinions make it easy to see that they don’t fully understand the subject, are nonetheless “recognized geniuses,” and they even ask for a ban on this kind of research and for government oversight… As pointed out by the OP, this idea of the singularity is nothing but fiction and, very accurately put, just a religious idea with nothing really scientific about it. But it is pervasive in pop culture and among voters, who can actually ban or dramatically influence this field.

And this, folks, is precisely why we need to address this issue. I don’t think Stephen Hawking ever asked for a ban on AI research, but rather issued a warning about autonomous weapons powered by AI. But of course, the media then fully capitalised on the statement by Hawking and blew it out of proportion with click-bait titles (beyond which most people don’t read anything).

This is why we now have people like the guy above, ironically, talking about it on this article.

The negatives are numerous. One is that people focus on the wrong problems, e.g. sentient AI, and are oblivious to the concrete problems, including (i) discrimination in algorithmic decision making, (ii) technological unemployment, and (iii) ceding control to mindless recommender systems that optimize only clicks (think Facebook’s fake news problem).

I can support that. As a consultant I increasingly face inflated expectations by customers regarding real-world AI application. The inflated expectations are a direct consequence of pop-science media coverage.

Why don’t we just build an AI reporter to cover AI? I’m sure Skynet would tell us the truth 😀

Well, you told us what you are going to tell us but you haven’t said anything yet. I look forward to the time when you say what you are going to say.

Thank you in advance for “weighing in”.

Valid comment until the very end. Being an ML PhD candidate and having perused the state-of-the-art papers in the field, he probably has a ‘rough sense’ regarding how far we may be from AGI.

Usually that rough prediction by actual ML/DL scientists tends to be a lot longer than what is actively being portrayed in the media, for the sake of clickbait articles.

I agree with you here. I also do want to see what Zachary has to write about this.

You are right in that there is an appreciation of the difference between narrow AI and general AI and that may be all that we need to know for now. There’s a long way to go from narrow AI to general AI; however, narrow AI is improving at a rapid clip. It is narrow AI and its employment-destroying capability that we have to worry about now. The most imminent threat is obvious: self-driving vehicles.

Self-driving vehicles are a threat to the status quo and particularly to all those employed in this industry.

I am so looking forward to the benefits of this technology, particularly in electric vehicles… fewer unnecessary deaths, horrific injuries and stress from human-caused accidents, quieter cities and suburbs, more real estate for parks and people and a more efficient economy.

At the risk of promoting a futurist… this is an interesting read, regardless of how many of these predictions are realized. http://www.futuristspeaker.com/job-opportunities/25-shocking-predictions-about-the-coming-driverless-car-era-in-the-u-s/#/

Bring it on!

I agree with Hhiram. Probably the biggest threat comes from humans building and becoming dependent on more advanced machines while continuing to struggle with the implicit limitations and contradictions of human behavior, thinking and reasoning. I believe Stephen Hawking stated he is more concerned about people accidentally creating a situation which negatively impacts the human race rather than the prospect of machines taking over through AGI.

The biggest casualty in this next phase of technological evolution is likely to be trust. Who do you trust, what do you trust? That’s always been a perennial question, although with advancing narrow AI/ML ‘black box’ processes upon which we are starting to rely, and may ‘soon’ be completely dependent, who will know enough about these systems to assess risk, real or perceived?

If we’re having such a problem with these conversations now, the blog threads in the future may look even more intriguing…

I very much enjoyed reading your article warning about the perils of unprecedented and mostly misguided hype about AI in general and machine learning/deep learning in particular.

I have tried in a couple of posts to do the same, but apparently the storm is too big and the commercialism (democracy?) in a capitalist society trumps (no pun intended!) reason and rationality.

I work in AI, but in a completely different paradigm: I work in NLU, and I am one of those who believe that data-driven/ML approaches to NLU are futile (see https://www.slideshare.net/walidsaba/w-saba-fsll-parts-1234). I might be wrong, but what I know about the subject tells me so.

Regardless of where our niche is in AI, all those working in AI and doing fundamental work should continue to speak out against this craze; otherwise I fear another and more severe AI Winter.

Thank you for your article. I think you are absolutely right. I see a lot of marketing around AI which is, diplomatically speaking, questionable. It leads people down false tracks, and they come to believe that the end of the world as they know it is near. This is plain bullshit and it is good that someone is brave enough to dig into it.

Correct me if I am wrong, but the latest research around neuro biological findings reveal that our brain might be best compared with a quantum computer, which we are effectively not able to build yet (a quantum computer which has a life span measured in seconds isn’t a real one for me). So we simply lack the hardware to have a singularity, not to speak of the relevant software. Neural networks of today must be designed for a specific purpose or problem space and trained with relevant data. This is the biggest problem. With big data we might get the chance to manage huge amounts of data to train complex neural networks. But this is no guarantee of success.

Hello. I confess this is not an area in which I have any experience. My own background is in a more well-trodden (and I daresay dull) profession, but that gives me another perspective on the problem.

A lot of your concern seems, justifiably, focused on wildly inaccurate, misleading, or misfocused journalistic coverage of AI.

This is a problem that impacts every area of expertise. Journalists are, for the most part, generalists rather than specialized correspondents on a particular area. Of course, there are some exceptions: sometimes you see journalists with medical backgrounds or finance backgrounds, for example. But for the most part this is not the case (and even where there is a degree of specialism, that is no guarantee of quality). I guess journalists don’t have the time or resources to become experts, or even particularly conversant, on all the different topics that they cover.

As you note, interest in AI is increasing. I suspect the defining feature of the issue you are experiencing is, in fact, as follows:

1. AI is not a special victim here, but is receiving more or less the same level of journalistic rigor as, say, natural sciences, economics, law, or medicine.

2. Press coverage of AI is only recently reaching the ‘critical mass’ where experts in the field can see (and despair at) the poor standard of journalistic reporting.

The reality is that, in many specialisms (and indeed entire industrial sectors), the poor standard of journalism is a fact of life to which people are already resigned. As one person I know frequently observes, it’s only when you see an article on something you know about that you realize how inaccurate everything else probably is.

Haha, amen to the last sentence!

I have held for years the same idea you express in your comment.

It is a statistical impossibility that the times I consume reporting on a subject I am familiar with are the only instances in which the reporters have no idea what they are talking about. Thus goes journalism. Having a full appreciation of the subject is the exception.

That puts this entire post in the proper context in my opinion.

This is a very good point and certainly needs to be taken into account by Zachary. If you take a step back you can see how much generalism occurs in media reporting on every industry. It is always unfortunate when it damages promising and emerging technologies however.

The author might be right there, but no quantitative analysis is provided. It would also be nice to see it in comparison to other “misinformation” with potential social and political impact.

Ditto!

I am hoping his later blog posts will delve into the detail he has alluded to. As a machine learning amateur myself it would certainly be good to have a critical analysis of popular reporting!

I think it’s fair to wait for Zachary’s complete series of posts, covering the FOUR main themes described in the article above, before we question, agree or disagree with, or rebut the author’s authority for analysing and writing all this up. However, I couldn’t agree more with Zachary’s intention to shape a discourse about AI in the real sense (meaning deep understanding, not superficial) so that the world would have an informed society, as we know how imperative this task will be in the near future.

Thank you for the post and the upcoming editions. You used the word outsize in three places. Did you mean outside in each?

Thanks for the note. No, I do not mean outside. I mean disproportionately large.

I personally believe you wrote a great article and pointed out a large flaw in media and most information sources in general, as well as the public’s often irrational interpretation of information. Flawed by design.

“AI Misinformation Epidemic”? https://www.reddit.com/r/artificial/comments/61xkgv/the_ai_misinformation_epidemic — sent me here to read all about it. Please let me tell y’all that yesterday at http://ai.neocities.org/perlmind.txt I uploaded a potentially immortal artificial intelligence that could put a stop to the epidemic.

interesting immortal AI you have there …

Oh my god, these comments.

“Technological capability is accelerating at an accelerating pace. It’s a double exponential”

“that a growing percentage of human endeavor is becoming based on information technologies.”

“Nick Bostrom”

“neuro biological findings reveal that our brain might be best compared with a quantum computer”

Hey rdp,

Sorry for my post full of typos, but there are several science sources about it which popped up in November 2016:

https://www.quantamagazine.org/20161102-quantum-neuroscience/

If this proves to be right it explains why humans could be capable of so much more. But not everyone is a genius. What happens to those workforces who can be replaced by automation or digital transformation (to use marketing buzz words)… Amazon failed this week with its fully automated supermarket (https://www.wsj.com/articles/amazon-delays-convenience-store-opening-to-work-out-kinks-1490616133) …. There is a danger that the uninformed hold AI responsible for that kind of development…

Thank you for taking the time to reply Zaplatynski.

I included the quote from your post because like AI, quantum is another term that is abused to misinform people. People like Deepak Chopra have created entire careers obscuring nonsense with the word quantum.

My intention is not to pick on you specifically, but do you know the difference between a quantum computer and a binary one? Are you familiar with the von Neumann computer architecture and its implications? Have you looked into the refutations of Penrose trying to apply Gödel’s theorem to describe cognition?

My point is that Lipton’s critique of AI misinformation is applicable to many fields. His arguments can’t be countered by misinformation from other fields.

Thanks again for taking the time to respond.

I think it’s perfectly fine for futurists and transhumanists to extrapolate from present trends and speculate about the future. I like what Carl Sagan said in his magnum opus: “We wish to find the truth, no matter where it lies. But to find the truth we need imagination and skepticism both. We will not be afraid to speculate, but we will be careful to distinguish speculation from fact.”

I also think that it’s OK for people to be religious about things. In fact, I think the bigger problem is not recognizing the boundaries of science and religiosity. Right now you are making a religious argument. You are trying to passionately persuade people about why imprecise thinking about technological trends may cause people to jump to unwarranted conclusions. But to persuade others of this claim, you must reach beyond science. You must appeal to some sense of morality. You must include words like “should,” while science can only provide facts and findings.

Scoping and framing the discussion about technological trends is important, but it’s also important to recognize the difference between long- and short-term thinking. Kurzweil’s law of accelerating returns is a useful observation about long-term increases in complexity and capability. It would be unwise to attribute too much precision to the principle, but it is still useful. Making predictions and decisions about the future is not the same as well-organized painstaking scientific research, but both endeavors have their uses.

Great comment.

Agree. We need to approach each side of the debate with a balanced cost-benefit analysis. Use each approach to the extent its benefits outweigh the costs.

Hey rdp,

As a computer scientist I know von Neumann of course, but on the others I am no expert. The difference between a normal computer and a quantum one is like (a bad comparison, but close enough for me) the difference between finite-state and non-finite-state machines. The latter we cannot build because we lack the massively parallel computation expected from quantum computers. Anyway, my interest is enough to conclude that we do not have the hardware (yet). So Johnny Depp’s movie Transcendence is pure fiction.

Yes, I agree with Lipton; he has good points. But I would go further: since AI is hyped, misused in marketing (I see people calling stuff AI-supported where I cannot see any AI, but it seems to sell), and not understood by the broad masses, it may end up like the witches at the end of the Middle Ages (the witch hunts did not happen in Europe during the Middle Ages but after the period we call the Middle Ages). So I see a chance that politicians ban AI not only because it could make people unemployed but also because it is seen as “evil technology” (a tool is always neutral). By the way, in the end it is not AI that causes unemployment but greedy people who try to live off the rest of the population.

After working with computers for over 4 decades I too am very concerned with the fear mongering surrounding AI and see a real danger in the fear mongering itself. It can lead to public outcry at what could be very beneficial uses for AI out of fear, and will provide cover for AI engineers who can cause a catastrophe.

If interested I have written a short essay expressing similar thoughts about this subject.

https://www.linkedin.com/pulse/pulling-killer-cyborg-rabbits-out-hat-john-smith

I applaud Zachary’s attempt to call attention to the problem. Misinformation, exaggeration and fear-mongering around AI — much of it based on thematic elements borrowed from Terminator movies, for goodness sake! — is anything but harmless. Just look at the impact alarmist websites and conspiracy theories have had on consumer acceptance of GMO crops, the claimed dangers of which are rarely supported by sound scientific studies. Same point with respect to the anti-vaccine crowd, who confuse anecdotal evidence — almost all of which fails to establish anything other than statistically irrelevant correlation — with fact. Frankly, in a world in which “fact” seems to be just another word for opinion and ignorance is no barrier to pronouncement, I can take comfort only in the knowledge that validated facts are true whether you believe them or not!

Hear, hear!

The singularity is a quasi-religious event with no basis in reality? Are you talking about the singularity of the Big Bang? And if so, what on Earth does it have to do with religion, and how on Earth has it no basis in reality?

No, I am not talking about the big bang.

We are learning more and more about human brain structure, firing of neurons, dendrites, synapses, and so forth, but we do not have the vaguest idea of how all this physical activity transforms into thought: into a Shakespeare writing, “To be or not to be…” or “Put out the light and then put out the light …” and a Wordsworth writing, “Then my heart with pleasure fills…”

We train neural nets with zillions of examples and empirical data. Show the Wright brothers zillions of birds, or dragonflies, or houseflies, and expect them to learn to “fly” using aerodynamics. It was Reverend Wright, their father, who said, if God intended man to fly, He would have given him wings! The reverend was thinking like the legendary Icarus.

If human intelligence lies in memory recall or seeing patterns in massive amounts of data, it will by definition be augmented by AI, silicon implants, “carbon copies.” But if human intelligence is exemplified by (1) Faraday noticing induced magnetism and dreaming up the concept of a “field,” or (2) Hertz noticing simultaneous sparking across the room in the gaps of unconnected wires when connected wires sparked on this side, and postulating “electromagnetic waves,” or (3) Fitzgerald and Lorentz imagining a kid bouncing a ball in a railway coach against the front panel versus the side panel and concluding space contraction and time dilation in relative motion, then the Singularity, however defined, seems farfetched. Whether we will get there some day is at best “religion” or faith. We first have to find out how neural activity creates thought–not just any thought, creative thought. I have yet to see a book by a Nobel Laureate that would teach me how to think Nobel-winning thoughts!

As a doctoral student at MIT in 1967-1970, I had long discussions on these ideas with Prof. McCulloch (who with Pitts started it all). Unfortunately his passing away in Sept 1969 changed my history. The philosophising halted. I drifted into Economics with Profs. Samuelson and Solow (both Nobel Laureates) after working with Prof. Marvin Minsky for a little while.

God you guys are whiny. If you’ve come into the world recently and are less than 45 years old, part of the reason you’re astonished at the treatment of the field is because you weren’t here for the earlier part of it-

I was in graduate school doing AI research many years ago, and I saw it first hand- AI originally presented grand ideas; “We’ll be able to beat chess grandmasters in 5 years! We’ll have universal translators in the next 10 years! We’ll have a machine that can pass the Turing test by the end of the decade!”… and people were throwing grant money at PhDs in the 1970s left and right with lofty promises like these. That was before people caught on.

You see, for better or worse, after failing to deliver decade after decade, AI became known as snake oil; a foolhardy Computer Science money pit that only belonged in academia. (For the kids, snake oil is defined by google as “a product, policy, […] of little real worth or value that is promoted as the solution to a problem”). At the time, besides enduring the usual mockery that came from other CS disciplines for AI being pie-in-the-sky and generally useless, (such as from high performance computing people, information theory people, OS people, etc), you had the same nonsense about being asked about Westworld robots (kids – there was a movie in the 70s, before HBO or cable tv existed, it’s not bad, check it out) and later Terminators. By the time I finished my degree I was so disgusted with the level of… well, not straight up quackery but at least academic dishonesty with known capabilities, that I left that area of computer science and never went back.

So don’t be surprised when the press abuses you, because congratulations – you took up the same field the last generation screwed up for you -“We can dO TEH ANYTHING!”. So the assumption is why not crazy killer robots too?

No one’s going to give you the benefit of the doubt this time, it’s up to you to push the boundary to produce something meaningful – better than our old learning chess programs and pathfinding, (A* oh boy!). Now you can do such fantastical things as… search the web! (WOW!!) balance a robot on two feet! recognize a cat meme! and… drive a car. sorta…

And as for the Turing test? Well, someday. Don’t promise it, and people will respect you more, (and you won’t look like a damned fool later). As long as people are talking about your field, it’s a win. Vanity Fair did an article? Excellent, more exposure means more questions (and money) will come.

But quit whining; it’s pathetic. You’re partially defining the future of humanity. Try to act like it.

I think this is a misguided reply. The misrepresentation is not problematic because it harms AI researchers. To be frank, in the short run, in many ways it helps researchers: high salaries from eager startups, professors poached from academia creating opportunities for young talent, etc. I don’t feel bad for them (us).

The misrepresentation is problematic because it misleads the public. The difference between now and last time (pre-winter) is that then machine learning was just an idea. We didn’t have machine learning driving cars on the road (in a serious way), we didn’t have algorithms curating the news. So the misinformation wasn’t so problematic with respect to social welfare.

Hi Zach,

good post, we really needed it! I’ll try to translate a large part of it or, at least, write a long summary of the post in Italian. Would you mind if I do? Please, let me know!

Best,

petrux

—

Yes, of course. Please link to the source and let us know when it’s up. Thanks! 🙂

Zach

I look forward to your article. I am sure you know you poked the bear by not showing due reverence to his holiness Kurzweil! I bought one of his books and couldn’t get past the first chapter: it is such unscientific garbage I find it amazing anybody takes him seriously.

I was forced to sit through a presentation using his slides which showed something like “paradigm shifts” and which started with the Big Bang, included asteroid strikes, etc. It’s like the guy doesn’t know that if you plot things on two axes and draw a line through it you imply causality!

As for AI, there is so much claptrap out there the mind absolutely boggles.

Remember: if you poke Elon Musk (peace be upon him) you’ll catch holy hell!

Good luck

Each religion has its own Messiah, and the techno-religion is no exception. Kurzweil is taken seriously as such a “Messiah” because he came up with several inventions, e.g. the first flatbed scanner, the first scanner that converted text to speech, the music synthesizer, and the first commercially marketed large-vocabulary speech recognition. He predicted that something like Deep Blue would beat a chess grandmaster by 1998. In the early eighties he thought that by the early 2000s the internet would emerge as a global phenomenon.

Each religion has some kind of an institution, and Google cofinanced the Singularity University.

Each religion has followers. I don’t know exact numbers, but I assume AI as a business generates stable cash flow.

Of course, AI is not just a business. It is in business with practical use cases, (human generated) pitfalls due to bias in models, and people managing expectations according either to their pro bono enthusiasm, or their commercial agenda.

In this context every effort to help those “lost in translation” is helpful. This however should happen without fingerpointing. Good communication is an art, if not a science. I wish there would be more great comms from true experts.

Some of this makes sense. I’d advise caution with boogeyman phrases like “fingerpointing”:

Any critical response could be described as “fingerpointing”, as a rhetorical device to escape accountability.

Also – perhaps there is a different standard to which we can hold authors and commenters. I wouldn’t express my thoughts on the topic so viscerally as Brian, but I wouldn’t say it goes beyond what’s fair game for a comment thread.

Hi Zachary,

Refreshing views you hold. Our research group, Computational Intelligence, had a discussion regarding curbing the misinformation you are addressing.

Cheers to taking such a bold stance. We need this in our society, otherwise the Internet through the prophets of misinformation will continue to bombard the society with meaningless and unscientific views.

There is far reaching value in engaging in principled research. I can’t wait to read your subsequent articles.

Thank you,

Mthandeni kaDumisani Langa

PhD student, University of Johannesburg, South Africa

Thanks! Interested to hear the takeaways from your research group’s conversation.

Edit of my first comment:

The rate of technological change is accelerating exponentially. This is readily observable in historical data of performance measures of a wide variety of technologies.

Here is one of the most important trends https://www.flickr.com/photos/jurvetson/31409423572/ in which you can observe a double exponential* growth of calculations per second per constant dollar for the last 120 years. * https://en.wikipedia.org/wiki/Double_exponential_function

Here are some other trends: http://www.singularity.com/charts/page17.html (more recent data are available in Kurzweil’s recent presentations.)

Let’s assume that there’s a 50% probability that these trends will continue insofar as the laws of physics will allow. Try to describe, in the least esoteric terms possible, the implications of these trends for humanity in the short to medium term. I don’t see how religious thinking is necessary for accelerating change to still be a meaningful and important observation.

I’ve said this in the past: Technological innovation is about transcending our current capabilities. That is a powerful notion. It will attract good thinkers and bad thinkers, good artists and bad artists, good engineers and bad engineers.

True, there is no edit button. I suggest reading the comment before you submit. This is a polite spam warning. I will have to go back and delete your comments if you post too many near-identical comments.

Thanks, I will keep that in mind.

On the point of “Failure of the Press”, when you say “overstating the brain-like nature of neural networks, and injecting expert opinions despite lacking expertise”, you need to consider that this is also a failure of academia. To see a prime example of this, take our colleague Geoffrey Hinton, who refers to the computations done in an artificial neural network as “thoughts”: https://www.youtube.com/watch?v=uAu3jQWaN6E . That is surely a very controversial statement for computer scientists, psychologists, philosophers, and others. But those are the kind of statements that catch people’s attention, even if they may be taken out of context or used dishonestly (hey, that guy Hinton built a machine that categorizes images by thinking about the properties that define each category!).

These kinds of overstatements are all over the place in academia. Just look at abstracts of papers posted on arxiv every day. Or the blog posts from big research groups, from universities and industry. There is a large group of people who seem to be in a competition over who screams the loudest. Perhaps they do it out of conviction. Or perhaps they do it to convince lay people that what they do is interesting. Or perhaps it is just to convince the funding agencies and angel investors. In my opinion, the “failure of the press” that you describe is a product of vanity, and possibly greed, in academia.

I agree, academics own a share of the responsibility for this misinformation. One pattern that I’ve witnessed several times: an academic, who would never be so ostentatious in their formal work, will found a startup with a name like BrainMind, perhaps commissioning graphics that evoke brains/neurons, etc.

However, this shouldn’t annul the duty of the press to get to the truth. Similarly, while financiers bear responsibility for the financial crisis, so do cheerleaders like Jim Cramer (CNBC).

Here’s the actual bit:

https://youtu.be/XG-dwZMc7Ng?t=4m11s

yikes.

I skimmed this article and the Vanity Fair article yesterday.

What did you find objectionable about it? It seemed pretty good to me.

I imagine you may be joking, David. In case you’re not… the problems with the Vanity Fair article are many. The piece portrays developments in AI as stemming principally from the personalities of Elon Musk and Demis Hassabis. It fails to qualitatively distinguish scientific developments from religious prophecies. At a time when AI may have real effects on people’s lives, this article did nothing to clarify what they might be or to paint a realistic picture of what ML actually does.

I wasn’t joking. I strongly disagree with your characterizations of the piece, except: “At a time when AI may have real effects on people’s lives, this article did nothing to clarify what they might be”. But that wasn’t the point of the article, so I don’t know why you’d complain about it.

What do you people think of Peter Naur’s argument that all non-computational forms of thought are being suppressed? (See his 2005 Turing Award lecture plus the http://www.naur.com web site.) AI is just using computers for finding patterns in data and taking credit for technological improvements in sensors and actuators.

This argument appears vacuous. I’m not sure there’s enough content here to actually require a response. In short, start by substituting “machine learning” for “AI” since that’s what we’re actually talking about. Then “ML is just using computers for finding patterns in data” isn’t an argument worth discussing, it’s simply regurgitating the very definition of the field. The problem here is the word “just”, which trivializes a tremendously difficult undertaking.